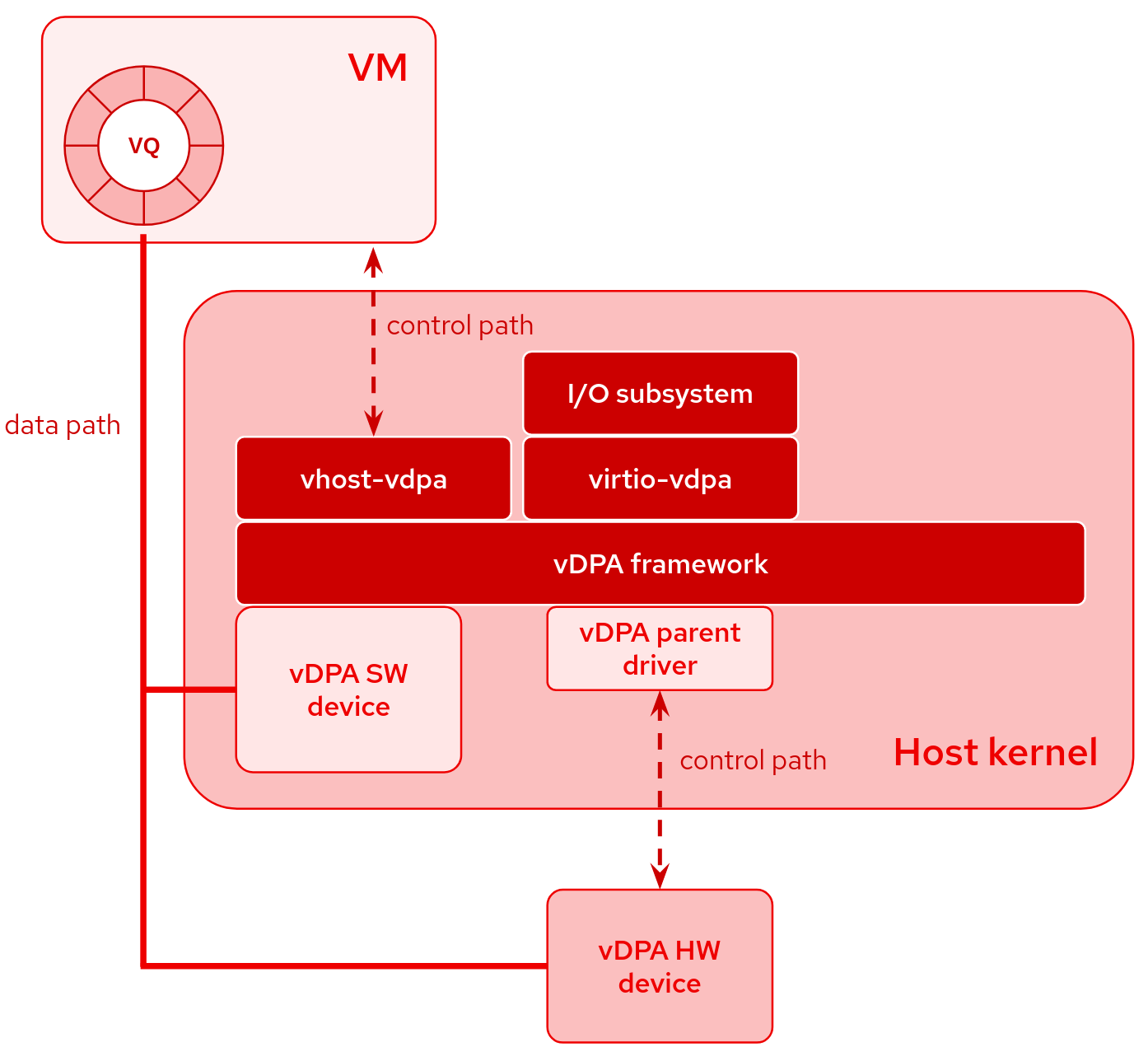

A vDPA device is a type of device that follows the virtio specification for its datapath but has a vendor-specific control path.

vDPA devices can be either physically implemented in hardware or emulated in software.

A small vDPA parent driver in the host kernel is required only for the control path. The main advantage is the unified software stack for all vDPA devices:

- vhost interface (vhost-vdpa) for userspace or guest virtio drivers, such as a VM running in QEMU

- virtio interface (virtio-vdpa) for bare-metal or containerized applications running in the host

- management interface (vdpa netlink) for instantiating devices and configuring virtio parameters

Useful Resources

Many blog posts and talks have been published in recent years that can help you better understand vDPA and its use cases. On vdpa-dev.gitlab.io we collected some of them; I suggest you explore at least a few.

Block devices

Most of the work in vDPA has been driven by network devices, but in recent years, we have also developed support for block devices.

The main use case is definitely leveraging the hardware to directly emulate the virtio-blk device and support different network backends such as Ceph RBD or iSCSI. This is the goal of some SmartNICs or DPUs, which are able to emulate virtio-net devices of course, but also virtio-blk for network storage.

The abstraction provided by vDPA also makes software accelerators possible, similar to existing vhost or vhost-user devices. We discussed that at KVM Forum 2021.

We talked about the fast path and the slow path in that talk. QEMU uses the slow path whenever it needs to handle requests itself, for example to support live migration or to apply I/O throttling. On the slow path, the device exposed to the guest is emulated in QEMU, which intercepts the requests and forwards them to the vDPA device through the driver implemented in libblkio. When QEMU doesn’t need to intervene, the fast path comes into play: the vDPA device is exposed directly to the guest, bypassing QEMU’s emulation.

libblkio exposes a common API for accessing block devices in userspace. It supports several drivers; here we focus on the virtio-blk-vhost-vdpa driver, which is used by the virtio-blk-vhost-vdpa block device in QEMU. For now it supports only the slow path, but in the future it should be able to switch to the fast path automatically. QEMU has supported libblkio drivers since version 7.2, so you can use the following options to attach a vDPA block device to a VM:

-blockdev node-name=drive_src1,driver=virtio-blk-vhost-vdpa,path=/dev/vhost-vdpa-0,cache.direct=on \
-device virtio-blk-pci,id=src1,bootindex=2,drive=drive_src1 \

Anyway, to fully leverage the performance of a vDPA hardware device, we can always use the generic vhost-vdpa-device-pci device offered by QEMU, which supports any vDPA device and exposes it directly to the guest. Of course, QEMU is not able to intercept requests in this scenario, and therefore some features offered by its block layer (e.g. live migration, disk format, etc.) are not supported. Since QEMU 8.0, you can use the following options to attach a generic vDPA device to a VM:

-device vhost-vdpa-device-pci,vhostdev=/dev/vhost-vdpa-0
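The two ways of attaching a vDPA block device differ only in a couple of QEMU options. As a rough sketch, the helper below prints the options for either mode. The function name `vdpa_blk_qemu_args`, the mode keywords `blockdev` and `generic`, and the node name `drive0` are made up for this illustration; only the option strings themselves come from the snippets above.

```shell
# Print the QEMU options that attach a vhost-vdpa character device to a VM.
# Hypothetical helper: the function name and the mode keywords are not part
# of QEMU; only the printed options come from the examples in this post.
#   $1: "blockdev" (libblkio driver, QEMU block layer features available)
#       "generic"  (vhost-vdpa-device-pci, device exposed directly)
#   $2: vhost-vdpa character device, e.g. /dev/vhost-vdpa-0
vdpa_blk_qemu_args() {
    mode=${1:-}
    chardev=${2:-}
    case $mode in
    blockdev)
        printf '%s\n' \
            "-blockdev node-name=drive0,driver=virtio-blk-vhost-vdpa,path=$chardev,cache.direct=on" \
            "-device virtio-blk-pci,drive=drive0"
        ;;
    generic)
        printf '%s\n' "-device vhost-vdpa-device-pci,vhostdev=$chardev"
        ;;
    *)
        echo "unknown mode: $mode (expected blockdev or generic)" >&2
        return 1
        ;;
    esac
}
```

Since the printed options contain no spaces other than the one between an option and its value, the output can be spliced unquoted into a command line, for example `qemu-system-x86_64 ... $(vdpa_blk_qemu_args generic /dev/vhost-vdpa-0)`. This only holds as long as the device path itself contains no spaces.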

At KVM Forum 2022, Alberto Faria and Stefan Hajnoczi introduced libblkio, while Kevin Wolf and I discussed its usage in the QEMU Storage Daemon (QSD).

Software devices

One of the significant benefits of vDPA is its strong abstraction, which enables the implementation of virtio devices in both hardware and software, whether in the kernel or in user space. This unification under a single framework, where devices appear identical to QEMU, facilitates the seamless integration of hardware and software components.

Kernel devices

Regarding in-kernel devices, starting from Linux v5.13, there exists a simple simulator designed for development and debugging purposes. It is available through the vdpa-sim-blk kernel module, which emulates a 128 MB ramdisk.
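Because the simulator only exists from Linux v5.13 onward, a setup script may want to check the running kernel before trying to load the module. The following is a minimal sketch in POSIX shell; `kernel_at_least` is a hypothetical helper name, and it compares only the major and minor numbers of a release string such as the one printed by `uname -r`.

```shell
# Succeed when kernel release $1 (e.g. "6.7.4-200.fc39.x86_64") is at least
# the MAJOR.MINOR version given in $2 (e.g. "5.13").
# Hypothetical helper; patch level and distribution suffixes are ignored.
kernel_at_least() {
    have=${1%%[!0-9.]*}     # drop everything from the first non-numeric char
    have_major=${have%%.*}
    rest=${have#*.}
    have_minor=${rest%%.*}
    want_major=${2%%.*}
    want_minor=${2#*.}
    [ "$have_major" -gt "$want_major" ] ||
        { [ "$have_major" -eq "$want_major" ] &&
          [ "$have_minor" -ge "$want_minor" ]; }
}
```

For example, `kernel_at_least "$(uname -r)" 5.13 && modprobe vdpa-sim-blk`. This is only a heuristic: a distribution kernel may be new enough and still not ship the module.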

As highlighted in the presentation at KVM Forum 2021, a future device in the kernel (similar to the repeatedly proposed but never merged vhost-blk) could potentially offer excellent performance. Such a device could be used as an alternative when hardware is unavailable, for instance, facilitating live migration in any system, regardless of whether the destination system features a SmartNIC/DPU or not.

User space devices

In user space, we can use VDUSE instead. QSD supports it, which allows us to export any disk image supported by QEMU as a vDPA device in this way:

qemu-storage-daemon \
  --blockdev file,filename=/path/to/disk.qcow2,node-name=file \
  --blockdev qcow2,file=file,node-name=qcow2 \
  --export type=vduse-blk,id=vduse0,name=vduse0,node-name=qcow2,writable=on
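The same command can be parameterized when several images need to be exported. The helper below is a sketch that only prints the command line; `qsd_vduse_cmd` and the node name `fmt` are hypothetical, and the optional third argument is assumed to be a QEMU format driver name such as `qcow2` or `raw`.

```shell
# Print a qemu-storage-daemon command line that exports IMAGE as a VDUSE
# block device called NAME. Hypothetical helper: it only prints, it does not
# start anything. IMAGE must not contain commas or spaces, because the
# value is pasted into a comma-separated QEMU option string unescaped.
qsd_vduse_cmd() {
    image=${1:-}
    name=${2:-}
    format=${3:-qcow2}
    if [ -z "$image" ] || [ -z "$name" ]; then
        echo "usage: qsd_vduse_cmd IMAGE NAME [FORMAT]" >&2
        return 1
    fi
    printf 'qemu-storage-daemon \\\n'
    printf '  --blockdev file,filename=%s,node-name=file \\\n' "$image"
    printf '  --blockdev %s,file=file,node-name=fmt \\\n' "$format"
    printf '  --export type=vduse-blk,id=%s,name=%s,node-name=fmt,writable=on\n' \
        "$name" "$name"
}
```

Calling `qsd_vduse_cmd /path/to/disk.img vduse1 raw` prints the variant for a raw image; the output can be reviewed and then pasted into a shell.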

Containers, VMs, or bare-metal

As mentioned in the introduction, vDPA supports different buses such as vhost-vdpa and virtio-vdpa. This flexibility enables the utilization of vDPA devices with virtual machines or user space drivers (e.g., libblkio) through the vhost-vdpa bus. Additionally, it allows interaction with applications running directly on the host or within containers via the virtio-vdpa bus.
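The mapping from consumer to bus can be written down as a small lookup. This is only an illustrative sketch: `vdpa_driver_for` and its keywords are hypothetical, and the printed names are the kernel driver names `vhost_vdpa` and `virtio_vdpa` as they appear in sysfs.

```shell
# Map a kind of consumer to the vDPA bus driver it needs.
# Hypothetical helper: the consumer keywords are made up for this sketch.
vdpa_driver_for() {
    case ${1:-} in
    vm | userspace)   echo vhost_vdpa ;;   # QEMU guests, libblkio drivers
    host | container) echo virtio_vdpa ;;  # block device in the host kernel
    *)
        echo "unknown consumer: ${1:-}" >&2
        return 1
        ;;
    esac
}
```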

The vdpa tool in iproute2 facilitates the management of vDPA devices through netlink, enabling the allocation and deallocation of these devices. Starting with Linux 5.17, vDPA drivers support driver_override. This enhancement allows dynamic reconfiguration during runtime, permitting the migration of a device from one bus to another in this way:

# load vdpa buses

$ modprobe -a virtio-vdpa vhost-vdpa

# load vdpa-blk in-kernel simulator

$ modprobe vdpa-sim-blk

# instantiate a new vdpasim_blk device called `vdpa0`

$ vdpa dev add mgmtdev vdpasim_blk name vdpa0

# `vdpa0` is attached to the first vDPA bus driver loaded

$ driverctl -b vdpa list-devices

vdpa0 virtio_vdpa

# change the `vdpa0` bus to `vhost-vdpa`

$ driverctl -b vdpa set-override vdpa0 vhost_vdpa

# `vdpa0` is now attached to the `vhost-vdpa` bus

$ driverctl -b vdpa list-devices

vdpa0 vhost_vdpa [*]

# Note: driverctl(8) integrates with udev so the binding is preserved.
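When driverctl is not installed, the same switch can probably be done with plain sysfs writes. The sketch below assumes the standard Linux driver-model files (`driver_override` in the device directory, `unbind` in the driver directory, and `drivers_probe` on the bus); whether driverctl performs exactly this sequence is an assumption. `vdpa_rebind` and the `SYSFS_ROOT` variable are hypothetical names; the variable exists only so the logic can be tried against a scratch directory instead of `/sys`.

```shell
# Rebind a vDPA device to another bus driver using plain sysfs writes.
# Hypothetical helper. SYSFS_ROOT defaults to /sys and is only a knob for
# experimenting against a fake directory tree.
#   $1: vDPA device name (e.g. vdpa0)
#   $2: driver name (vhost_vdpa or virtio_vdpa)
vdpa_rebind() {
    dev=$1
    new_drv=$2
    root=${SYSFS_ROOT:-/sys}
    dev_dir="$root/bus/vdpa/devices/$dev"
    [ -d "$dev_dir" ] || { echo "no such vDPA device: $dev" >&2; return 1; }

    # Restrict the next probe to the requested driver.
    printf '%s\n' "$new_drv" > "$dev_dir/driver_override" || return 1

    # Detach from the current driver, if the device is bound to one.
    if [ -L "$dev_dir/driver" ]; then
        cur_drv=$(basename "$(readlink "$dev_dir/driver")")
        printf '%s\n' "$dev" > "$root/bus/vdpa/drivers/$cur_drv/unbind" || return 1
    fi

    # Ask the bus to probe the device again; the override picks the driver.
    printf '%s\n' "$dev" > "$root/bus/vdpa/drivers_probe"
}
```

Run as root, `vdpa_rebind vdpa0 vhost_vdpa` would be the counterpart of the `driverctl set-override` call. Unlike driverctl, nothing here is persisted, so the binding would not survive a reboot or a device re-creation.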

Examples

Below are several examples of how to use VDUSE and the QEMU Storage Daemon with VMs (QEMU) or containers (podman). These steps are easily adaptable to any hardware that supports virtio-blk devices via vDPA.

qcow2 image available for host applications and containers

# load vdpa buses

$ modprobe -a virtio-vdpa vhost-vdpa

# create an empty qcow2 image

$ qemu-img create -f qcow2 test.qcow2 10G

# load vduse kernel module

$ modprobe vduse

# launch QSD exposing the `test.qcow2` image as `vduse0` vDPA device

$ qemu-storage-daemon --blockdev file,filename=test.qcow2,node-name=file \
  --blockdev qcow2,file=file,node-name=qcow2 \
  --export vduse-blk,id=vduse0,name=vduse0,num-queues=1,node-name=qcow2,writable=on &

# instantiate the `vduse0` device (same name used in QSD)

$ vdpa dev add name vduse0 mgmtdev vduse

# be sure to attach it to the `virtio-vdpa` bus to use it with host applications

$ driverctl -b vdpa set-override vduse0 virtio_vdpa

# device exposed as a virtio device, but attached to the host kernel

$ lsblk -pv

NAME TYPE TRAN SIZE RQ-SIZE MQ

/dev/vda disk virtio 10G 256 1

# start a container with `/dev/vda` attached

$ podman run -it --rm --device /dev/vda --group-add keep-groups fedora:39 bash
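In the steps above, `qemu-storage-daemon` is started in the background and `vdpa dev add` follows immediately. When the same steps run from a script, it may be safer to wait until the VDUSE device actually exists. A minimal sketch: `wait_for_path` is a hypothetical helper, and the `/dev/vduse/vduse0` path in the usage line assumes the `/dev/vduse/$NAME` character-device naming described in the kernel's VDUSE documentation.

```shell
# Poll until PATH exists, checking once per second.
# Hypothetical helper.
#   $1: path to wait for
#   $2: maximum number of checks (default 10)
wait_for_path() {
    path=$1
    tries=${2:-10}
    while [ "$tries" -gt 0 ]; do
        [ -e "$path" ] && return 0
        sleep 1
        tries=$((tries - 1))
    done
    echo "timed out waiting for $path" >&2
    return 1
}
```

A script could then run `wait_for_path /dev/vduse/vduse0 10 && vdpa dev add name vduse0 mgmtdev vduse` right after launching QSD.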

Launch a VM using a vDPA device

# download Fedora cloud image (or use any other bootable image you want)

$ wget https://download.fedoraproject.org/pub/fedora/linux/releases/39/Cloud/x86_64/images/Fedora-Cloud-Base-39-1.5.x86_64.qcow2

# launch QSD exposing the VM image as `vduse1` vDPA device

$ qemu-storage-daemon \
  --blockdev file,filename=Fedora-Cloud-Base-39-1.5.x86_64.qcow2,node-name=file \
  --blockdev qcow2,file=file,node-name=qcow2 \
  --export vduse-blk,id=vduse1,name=vduse1,num-queues=1,node-name=qcow2,writable=on &

# instantiate the `vduse1` device (same name used in QSD)

$ vdpa dev add name vduse1 mgmtdev vduse

# initially it's attached to the host (`/dev/vdb`), because `virtio-vdpa`

# is the first kernel module we loaded

$ lsblk -pv

NAME TYPE TRAN SIZE RQ-SIZE MQ

/dev/vda disk virtio 10G 256 1

/dev/vdb disk virtio 5G 256 1

$ lsblk /dev/vdb

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINTS

vdb 251:16 0 5G 0 disk

├─vdb1 251:17 0 1M 0 part

├─vdb2 251:18 0 1000M 0 part

├─vdb3 251:19 0 100M 0 part

├─vdb4 251:20 0 4M 0 part

└─vdb5 251:21 0 3.9G 0 part

# and it is identified as `virtio1` in the host

$ ls /sys/bus/vdpa/devices/vduse1/

driver driver_override power subsystem uevent virtio1

# attach it to the `vhost-vdpa` bus to use the device with VMs

$ driverctl -b vdpa set-override vduse1 vhost_vdpa

# `/dev/vdb` is not available anymore

$ lsblk -pv

NAME TYPE TRAN SIZE RQ-SIZE MQ

/dev/vda disk virtio 10G 256 1

# the device is identified as `vhost-vdpa-1` in the host

$ ls /sys/bus/vdpa/devices/vduse1/

driver driver_override power subsystem uevent vhost-vdpa-1

$ ls -l /dev/vhost-vdpa-1

crw-------. 1 root root 511, 0 Feb 12 17:58 /dev/vhost-vdpa-1

# launch QEMU using `/dev/vhost-vdpa-1` device with the

# `virtio-blk-vhost-vdpa` libblkio driver

$ qemu-system-x86_64 -m 512M -smp 2 -M q35,accel=kvm,memory-backend=mem \
  -object memory-backend-memfd,share=on,id=mem,size="512M" \
  -blockdev node-name=drive0,driver=virtio-blk-vhost-vdpa,path=/dev/vhost-vdpa-1,cache.direct=on \
  -device virtio-blk-pci,drive=drive0

# `virtio-blk-vhost-vdpa` blockdev can be used with any QEMU block layer
# features (e.g. live migration, I/O throttling).
# In this example we are using I/O throttling:

$ qemu-system-x86_64 -m 512M -smp 2 -M q35,accel=kvm,memory-backend=mem \
  -object memory-backend-memfd,share=on,id=mem,size="512M" \
  -blockdev node-name=drive0,driver=virtio-blk-vhost-vdpa,path=/dev/vhost-vdpa-1,cache.direct=on \
  -blockdev node-name=throttle0,driver=throttle,file=drive0,throttle-group=limits0 \
  -object throttle-group,id=limits0,x-iops-total=2000 \
  -device virtio-blk-pci,drive=throttle0

# Alternatively, we can use the generic `vhost-vdpa-device-pci` to take

# advantage of all the performance, but without having any QEMU block layer

# features available

$ qemu-system-x86_64 -m 512M -smp 2 -M q35,accel=kvm,memory-backend=mem \
  -object memory-backend-memfd,share=on,id=mem,size="512M" \
  -device vhost-vdpa-device-pci,vhostdev=/dev/vhost-vdpa-1
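In the walkthrough above, the `vhost-vdpa-1` name is read from sysfs by eye before being passed to QEMU. A script could look it up instead. The sketch below relies only on the sysfs layout shown in the `ls /sys/bus/vdpa/devices/vduse1/` output; `vdpa_vhost_chardev` and the `SYSFS_ROOT` variable are hypothetical names, the latter defaulting to `/sys`.

```shell
# Print the /dev/vhost-vdpa-N character device that belongs to a vDPA device
# bound to the vhost-vdpa bus. Hypothetical helper; SYSFS_ROOT is only a
# knob for trying the lookup against a fake directory tree.
#   $1: vDPA device name (e.g. vduse1)
vdpa_vhost_chardev() {
    dev=$1
    root=${SYSFS_ROOT:-/sys}
    for entry in "$root/bus/vdpa/devices/$dev"/vhost-vdpa-*; do
        # An unmatched glob stays literal, so skip entries that do not exist.
        [ -e "$entry" ] || continue
        printf '/dev/%s\n' "$(basename "$entry")"
        return 0
    done
    echo "$dev is not bound to vhost-vdpa" >&2
    return 1
}
```

The result can be stored in a variable, for example `chardev=$(vdpa_vhost_chardev vduse1)`, and then used as the `path=` or `vhostdev=` value in the QEMU commands.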