At KVM Forum 2019, we discussed the next steps for AF_VSOCK, and several requests came from the audience.

Over the last few months we worked on those requests, and recent Linux releases contain new features that we describe in this blog post:

- Nested VMs support, available in Linux 5.5

- Local communication support, available in Linux 5.6

DevConf.CZ 2020

These updates and an introduction to AF_VSOCK were presented at DevConf.CZ 2020 during the “VSOCK: VM↔host socket with minimal configuration” talk. Slides and recording are available.

Nested VMs

Before Linux 5.5, the AF_VSOCK core supported only one transport loaded at runtime. That was a limitation for nested VMs, which need multiple transports loaded together.

Types of transport

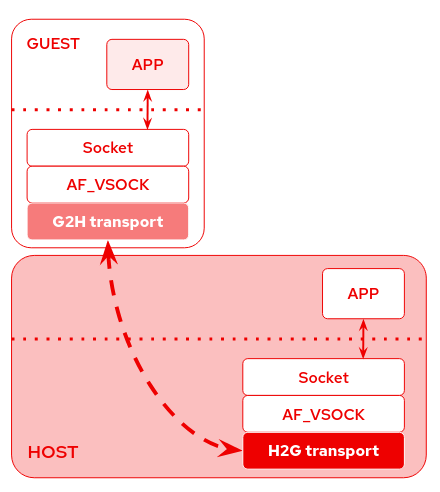

Under the AF_VSOCK core, which provides the socket interface to user space applications, we have several transports that implement the communication channel between guest and host.

These transports depend on the hypervisor and we can put them in two groups:

- H2G (host to guest) transports: they run in the host and usually they provide the device emulation; currently we have vhost and vmci transports.

- G2H (guest to host) transports: they run in the guest and usually they are device drivers; currently we have virtio, vmci, and hyperv transports.
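
To see which of these transports a machine currently has loaded, one option is to look at the kernel module list. The following is only a rough sketch: the module names in `MODULE_ROLES` are our assumption about how mainline kernels name these transports, the helper `loaded_transport_roles` is hypothetical, and a transport built into the kernel will not show up in `/proc/modules` at all.

```python
# Assumed mapping from kernel module name to the transport role(s) it provides.
# The names are an assumption about mainline kernels, not taken from this post.
MODULE_ROLES = {
    "vhost_vsock": ["H2G"],
    "vmw_vsock_virtio_transport": ["G2H"],
    "vmw_vsock_vmci_transport": ["G2H", "H2G"],
    "hv_sock": ["G2H"],
}


def loaded_transport_roles(proc_modules_text):
    """Map each known vsock transport module found in the text to its roles.

    The argument is expected to have the format of /proc/modules,
    where the first whitespace-separated field of each line is the module name.
    """
    roles = {}
    for line in proc_modules_text.splitlines():
        fields = line.split()
        if fields and fields[0] in MODULE_ROLES:
            roles[fields[0]] = MODULE_ROLES[fields[0]]
    return roles
```

On a real system it could be called as `loaded_transport_roles(open("/proc/modules").read())`.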

Multi-transports

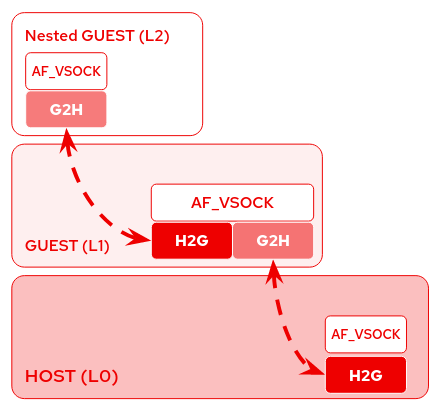

In a nested VM environment, we need to load both G2H and H2G transports together in the L1 guest. For this reason, we implemented multi-transport support, which makes it possible to use vsock through nested VMs.

Starting from Linux 5.5, the AF_VSOCK core can handle two types of transports loaded together at runtime:

- H2G transport, to communicate with the guest

- G2H transport, to communicate with the host.

So in the QEMU/KVM environment, the L1 guest will load both virtio-transport, to communicate with L0, and vhost-transport, to communicate with L2.
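
To make the idea concrete, here is a simplified model of how a destination CID could be mapped to one of the two loaded transports. It is a sketch, not the kernel code: `pick_transport` is a hypothetical helper, and the rule that CIDs up to VMADDR_CID_HOST go toward the host while higher CIDs go toward a guest is our assumption about the selection logic.

```python
from typing import Optional

VMADDR_CID_HOST = 2  # well-known CID of the host


def pick_transport(dst_cid: int,
                   g2h: Optional[str],
                   h2g: Optional[str]) -> Optional[str]:
    """Return the name of the transport a connection to dst_cid would use.

    g2h and h2g are the names of the loaded transports, or None when
    that kind of transport is not loaded.
    Assumption: CIDs up to VMADDR_CID_HOST are reached through the G2H
    transport, every other CID is a guest reached through the H2G transport.
    """
    if dst_cid <= VMADDR_CID_HOST:
        return g2h
    return h2g
```

In the L1 guest described above, `pick_transport(VMADDR_CID_HOST, "virtio", "vhost")` selects virtio to reach L0, while a destination such as CID 3 (an L2 guest) selects vhost.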

Local Communication

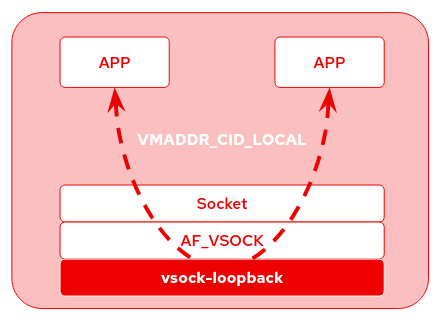

Another feature recently added is the possibility to communicate locally on the same host. This feature, suggested by Richard WM Jones, can be very useful for testing and debugging applications that use AF_VSOCK without running VMs.

Linux 5.6 introduces a new transport called vsock-loopback, and a new well-known CID for local communication: VMADDR_CID_LOCAL (1). It’s a special CID to direct packets to the same host that generated them.

Other CIDs can be used for the same purpose, but it’s preferable to use VMADDR_CID_LOCAL:

- Local Guest CID
  - if G2H is loaded (e.g. running in a VM)

- VMADDR_CID_HOST (2)
  - if H2G is loaded and G2H is not loaded (e.g. running on L0). If G2H is also loaded, then VMADDR_CID_HOST is used to reach the host
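
The rules in this list can be written down as a small decision function. This is only an illustrative model of the text above, assuming vsock-loopback is loaded; `is_local_destination` and its parameters are hypothetical names, not kernel identifiers.

```python
from typing import Optional

VMADDR_CID_LOCAL = 1  # well-known CID for local communication
VMADDR_CID_HOST = 2   # well-known CID of the host


def is_local_destination(dst_cid: int,
                         guest_cid: Optional[int],
                         h2g_loaded: bool) -> bool:
    """Tell whether dst_cid would be handled locally.

    guest_cid is the local guest CID when a G2H transport is loaded,
    or None when no G2H transport is loaded (e.g. on L0).
    Assumption: the vsock-loopback transport is loaded.
    """
    if dst_cid == VMADDR_CID_LOCAL:
        return True  # always local, the preferred choice
    if guest_cid is not None:
        # G2H loaded: only our own guest CID is local,
        # VMADDR_CID_HOST reaches the host instead.
        return dst_cid == guest_cid
    # G2H not loaded: VMADDR_CID_HOST is local if H2G is loaded.
    return h2g_loaded and dst_cid == VMADDR_CID_HOST
```

For example, inside a VM with guest CID 3, CIDs 1 and 3 are local while CID 2 still reaches the host.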

Richard recently used vsock local communication to implement a regression test for nbdkit/libnbd vsock support, using the new VMADDR_CID_LOCAL.

Example

```shell
# Listening on port 1234 using ncat(1)
l0$ nc --vsock -l 1234

# Connecting to the local host using VMADDR_CID_LOCAL (1)
l0$ nc --vsock 1 1234
```
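
The same round trip can be sketched with the Python `socket` module, which supports AF_VSOCK on Linux since Python 3.7. This is a minimal sketch under a few assumptions: the kernel is 5.6 or later with vsock-loopback available, `VMADDR_CID_LOCAL` is defined by hand with the value given in this post because we do not rely on the `socket` module exporting it, and `roundtrip` is a hypothetical helper name.

```python
import socket
import threading

# Value taken from this post; defined here rather than read from the
# socket module (assumption: the module may not export it).
VMADDR_CID_LOCAL = 1


def roundtrip(port: int = 1234, payload: bytes = b"hello vsock") -> bytes:
    """Send payload to a listener on the same host through VMADDR_CID_LOCAL
    and return the bytes the listener received."""
    received = []

    with socket.socket(socket.AF_VSOCK, socket.SOCK_STREAM) as server:
        server.settimeout(5.0)  # do not wait forever if nothing connects
        server.bind((socket.VMADDR_CID_ANY, port))
        server.listen(1)

        def accept_one():
            # Accept a single connection and read one small message.
            conn, _peer = server.accept()
            with conn:
                received.append(conn.recv(4096))

        listener = threading.Thread(target=accept_one, daemon=True)
        listener.start()

        with socket.socket(socket.AF_VSOCK, socket.SOCK_STREAM) as client:
            client.connect((VMADDR_CID_LOCAL, port))
            client.sendall(payload)

        listener.join()

    return received[0]
```

Calling `roundtrip()` on a host without vsock-loopback support is expected to fail with an `OSError` at `connect()`, so it is best tried on a kernel where the `nc` commands above already work.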

Patches

- [PATCH net-next v2 00/15] vsock: add multi-transports support

- [PATCH net-next v2 0/6] vsock: add local transport support